Large-scale analyses

This page is also meant as a tutorial on how to perform large-scale analyses with protopipe.

Requirements

- protopipe (see Getting started),
- the GRID interface (see Interface to the DIRAC grid),
- familiarity with the basic pipeline workflow (see Pipeline),
- familiarity with the scripts provided by protopipe (see Scripts).

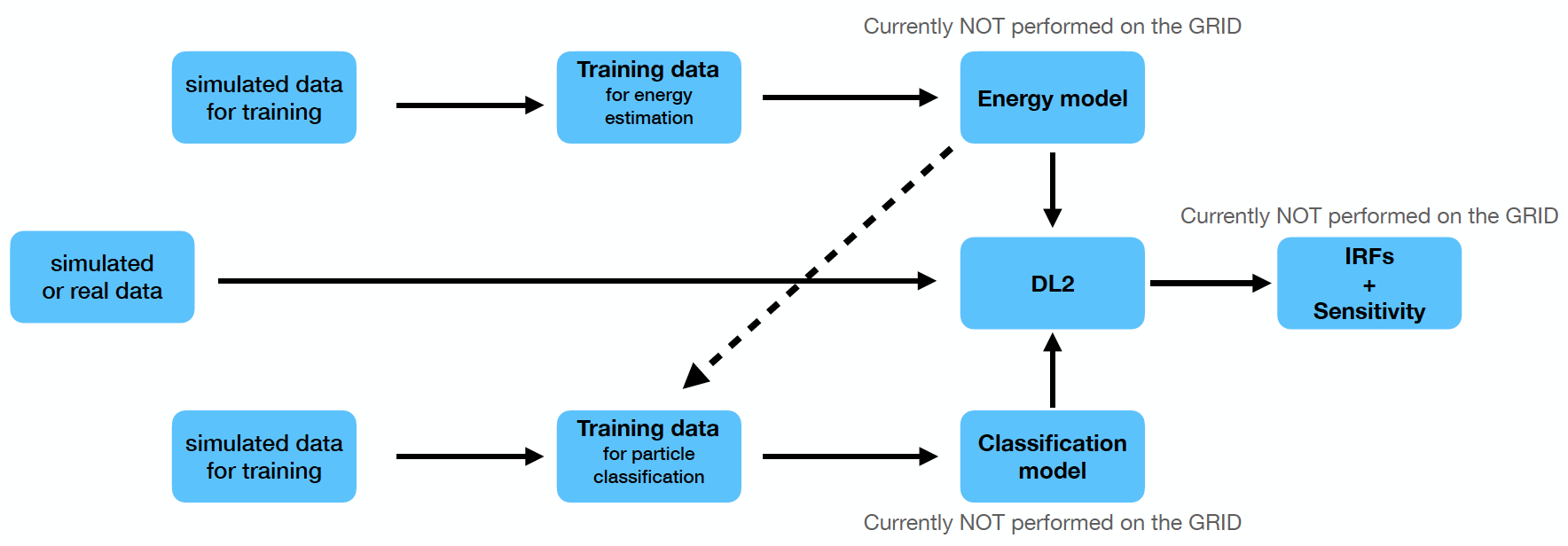

Standard analysis workflow with protopipe.
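The dependencies between the stages of the standard workflow can be summarized as a small graph: the classification training data needs the energy model (gammas are processed with energy estimation), the DL2 data needs both models, and the performance needs the DL2 data. The following is a minimal Python sketch, assuming only these dependencies; the stage labels are illustrative and are not protopipe identifiers. It uses the standard-library ``graphlib`` module (Python 3.9+) to derive an order in which the stages can be run.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each stage of the standard workflow, mapped to the stages it depends on.
# The labels are illustrative and are not protopipe identifiers.
WORKFLOW = {
    "energy training data": set(),  # gammas, without energy estimation
    "energy model": {"energy training data"},
    "classification training data": {"energy model"},  # gammas and protons
    "classification model": {"classification training data"},
    "DL2 data": {"energy model", "classification model"},  # gammas, protons, electrons
    "performance": {"DL2 data"},
}


def execution_order(workflow):
    """Return an order in which the stages can be run, respecting dependencies."""
    return list(TopologicalSorter(workflow).static_order())


if __name__ == "__main__":
    for step, stage in enumerate(execution_order(WORKFLOW), start=1):
        print(f"{step}. {stage}")
```

For this graph the order is unique and matches the sequence of steps described in the Usage section (after the analysis setup).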

Usage

Preliminary operations

1. Get a proxy with ``dirac-proxy-init``.
2. Choose the simulation production that you want to work on (the available productions are reported, e.g., in the CTA wiki).
3. Use ``cta-prod-dump-dataset`` to obtain the original lists of simtel files for each particle type.
4. Use ``protopipe-SPLIT_DATASET`` to split the original lists depending on the chosen analysis workflow.
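``protopipe-SPLIT_DATASET`` performs this split for you. Purely to illustrate the idea, here is a minimal Python sketch, assuming that each original list is a plain sequence of file names and that every subset of the workflow receives a fixed fraction of the files. The function name, the subset names and the fractions are hypothetical and do not reproduce the script's actual logic or output format.

```python
def split_file_list(files, fractions):
    """Split a list of file names into consecutive, non-overlapping subsets.

    ``fractions`` maps each (hypothetical) subset name to the fraction of
    files it should receive; the fractions must sum to 1. Boundaries are
    rounded to whole files and the last subset takes the remainder.
    """
    if abs(sum(fractions.values()) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    files = list(files)
    subsets = {}
    start, cumulative = 0, 0.0
    names = list(fractions)
    for index, name in enumerate(names):
        cumulative += fractions[name]
        # The last subset always ends at the end of the list.
        stop = len(files) if index == len(names) - 1 else round(cumulative * len(files))
        subsets[name] = files[start:stop]
        start = stop
    return subsets


# Hypothetical example: gammas are needed in three stages of the workflow.
gammas = [f"gamma_run{i}.simtel.zst" for i in range(10)]
parts = split_file_list(
    gammas, {"energy_training": 0.3, "classification_training": 0.3, "DL2": 0.4}
)
for name, subset in parts.items():
    print(name, len(subset))
```

Splitting into disjoint subsets keeps the events used to train the models separate from those used to produce DL2 data.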

Warning

For the moment, only the standard analysis workflow (stored under protopipe_grid_interface/aux) has been tested.

Note

Make sure you are using a protopipe-CTADIRAC-based environment; otherwise you won’t be able to interface with DIRAC.

To monitor the jobs, you can use the DIRAC Web Interface.

Start your analysis

Setup analysis

Use

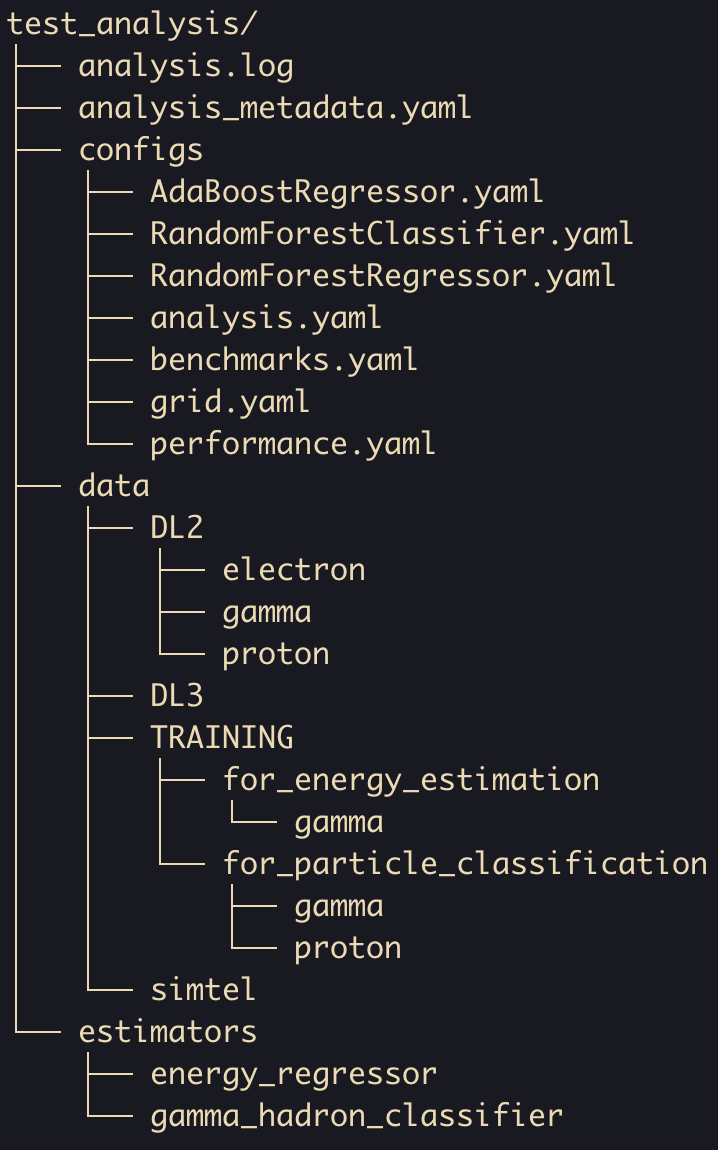

protopipe-CREATE_ANALYSISto create a directory tree, depending on your setup.The script will store and partially edit for you all available configuration files under the

configsfolder. It will also create ananalysis_metadata.yamlfile which will store the basic information regarding your analysis on the grid and on your machine.Note

To reproduce or access the analysis data of someone else on DIRAC it will be sufficient to modify the metadata key

analyses_directoryreferred to your local path.To reproduce or access the analysis data of someone else on DIRAC it will be sufficient to modify the metadata key

analyses_directoryreferred to your local path.
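As an illustration of the note above, the following minimal sketch changes only ``analyses_directory`` in a copy of someone else’s metadata. It assumes the file has already been loaded into a dictionary and that ``analyses_directory`` is a top-level key; the function name and the ``analysis_name`` key in the example are hypothetical.

```python
import copy


def retarget_metadata(metadata, local_analyses_directory):
    """Return a copy of the analysis metadata pointing to a new local path.

    Only the ``analyses_directory`` key (assumed here to be a top-level key)
    is changed; all other entries are copied unchanged.
    """
    updated = copy.deepcopy(metadata)  # leave the original dictionary untouched
    updated["analyses_directory"] = local_analyses_directory
    return updated


# Hypothetical metadata of a colleague's analysis, already loaded as a dict.
colleague = {"analysis_name": "test_analysis", "analyses_directory": "/home/alice/analyses"}
mine = retarget_metadata(colleague, "/home/bob/analyses")
print(mine["analyses_directory"])
```

To work on the actual file, a YAML library such as PyYAML can read it with ``yaml.safe_load`` and write the updated dictionary back with ``yaml.safe_dump``.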

Obtain training data for energy estimation

1. Edit ``grid.yaml`` to use gammas without energy estimation.
2. Submit the jobs with ``protopipe-SUBMIT_JOBS --analysis_path=[...]/test_analysis --output_type=TRAINING ....``
3. Once the jobs have concluded and the files are ready, use ``protopipe-DOWNLOAD_AND_MERGE``.
4. Use ``protopipe-BENCHMARK`` to check the properties of the data sample you obtained.

Build the model for energy estimation

1. Edit the configuration file of your model of choice.
2. Use ``protopipe-MODEL`` with this configuration file.
3. Use ``protopipe-BENCHMARK`` to check the performance of the generated models.
4. Use ``protopipe-UPLOAD_MODELS`` to upload the models and the configuration file to your analysis directory on the DIRAC File Catalog.

Obtain training data for particle classification

1. Edit ``grid.yaml`` to use gammas with energy estimation.
2. Submit the jobs with ``protopipe-SUBMIT_JOBS --analysis_path=[...]/test_analysis --output_type=TRAINING ....``
3. Once the jobs have concluded and the files are ready, use ``protopipe-DOWNLOAD_AND_MERGE``.
4. Repeat the first 3 points for protons.
5. Use ``protopipe-BENCHMARK`` to check the quality of the energy estimation on this data sample.

Build a model for particle classification

1. Edit ``RandomForestClassifier.yaml``.
2. Use ``protopipe-MODEL`` with this configuration file.
3. Use ``protopipe-BENCHMARK`` to check the performance of the generated models.
4. Use ``protopipe-UPLOAD_MODELS`` to upload the models and the configuration file to your analysis directory on the DIRAC File Catalog.

Get DL2 data

Execute points 1 and 2 for gammas, protons, and electrons separately.

1. Submit the jobs with ``protopipe-SUBMIT_JOBS --analysis_path=[...]/test_analysis --output_type=DL2 ....``
2. Once the jobs have concluded and the files are ready, use ``protopipe-DOWNLOAD_AND_MERGE``.
3. Use ``protopipe-BENCHMARK`` to check the quality of the generated DL2 data.

Estimate the performance (protopipe environment)

1. Edit ``performance.yaml``.
2. Launch ``protopipe-DL3-EventDisplay`` with this configuration file.
3. Use ``protopipe-BENCHMARK`` to check the quality of the generated DL3 data.

Troubleshooting

Issues with the login

After issuing the command ``dirac-proxy-init`` I get the message “Your host clock seems to be off by more than a minute! Thats not good. We’ll generate the proxy but please fix your system time” (or similar)

This can happen if you are working from a container. Execute these commands:

    systemctl status systemd-timesyncd.service
    sudo systemctl restart systemd-timesyncd.service
    timedatectl

Check that the output of ``timedatectl`` contains:

    System clock synchronized: yes
    systemd-timesyncd.service active: yes
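If you need to repeat this check often (for example in a setup script for your container), the output of ``timedatectl`` can be parsed. The following is a minimal sketch, assuming the ``label: value`` layout shown above; the function names are hypothetical, and the exact labels may differ between systemd versions, so adapt them to what your system prints.

```python
def parse_timedatectl(output):
    """Parse the 'label: value' lines printed by ``timedatectl``."""
    status = {}
    for line in output.splitlines():
        label, separator, value = line.partition(":")
        if separator:  # skip lines without a colon
            status[label.strip()] = value.strip()
    return status


def clock_checks(output):
    """Report whether each of the two lines checked in this guide reads 'yes'."""
    status = parse_timedatectl(output)
    # Labels as shown in this guide; other systemd versions may print the
    # service line differently, so adapt the labels to your system.
    labels = ("System clock synchronized", "systemd-timesyncd.service active")
    return {label: status.get(label) == "yes" for label in labels}
```

On the machine itself, the text to parse can be obtained with ``subprocess.run(["timedatectl"], capture_output=True, text=True).stdout``.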

After issuing the command ``dirac-proxy-init`` and typing my certificate password, the process hangs and never completes

One possible cause is your network security settings.

Some networks might require adding the option ``-L`` to ``dirac-proxy-init``.

Issues with the download

While downloading data I get “UTC Framework/API ERROR: Failures occurred during rm.getFile”

Something went wrong during the download phase, either because of your network connection (check for possible instabilities) or because of a problem on the server side (in which case the solution is out of your control).

The recommended approach is to download data using ``protopipe-DOWNLOAD_AND_MERGE``.

By default, this script enables a backup download based on an rsync-type command.

Warning

There is a known bug in DIRAC, at least on macOS, which prevents the use of this feature until a new release.
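The idea behind the backup download can be sketched in a few lines of Python. This is a hypothetical illustration of a primary/backup pattern, not the code of ``protopipe-DOWNLOAD_AND_MERGE``: ``primary`` and ``backup`` are placeholder callables standing in for, respectively, the DIRAC download and the rsync-type copy, and the number of attempts is an arbitrary choice.

```python
def download_with_fallback(file_name, primary, backup, attempts=2):
    """Try ``primary`` up to ``attempts`` times, then ``backup`` likewise.

    ``primary`` and ``backup`` are placeholder callables that take a file
    name, return the local path on success and raise an exception on failure.
    """
    errors = []
    for method in (primary, backup):
        for _ in range(attempts):
            try:
                return method(file_name)
            except Exception as error:  # keep the message and try again
                name = getattr(method, "__name__", repr(method))
                errors.append(f"{name}: {error}")
    raise RuntimeError(f"all download attempts failed for {file_name}: {errors}")
```

Collecting the error messages instead of discarding them makes it easier to tell a network problem on your side from a persistent failure on the server side.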

Issues with the job submission

I get an error like “Data too long for column ‘JobName’ at row 1” or similar

The job name is too long. As a temporary workaround, shorten it by editing ``submit_jobs.py`` in the source code of the interface.

An option to modify the job name at launch time will be available soon.
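If you edit ``submit_jobs.py`` to shorten the job name, one option is to truncate it and append a short hash, so that different long names stay distinguishable. The following is a minimal sketch with a hypothetical function name; the maximum length is left as a parameter because the size of the ``JobName`` column is not stated on this page, so check the limit before choosing a value.

```python
import hashlib


def shorten_job_name(name, max_length):
    """Shorten a job name to at most ``max_length`` characters.

    A short hash of the full name is appended so that different long
    names remain distinguishable after truncation.
    """
    if len(name) <= max_length:
        return name
    digest = hashlib.sha1(name.encode()).hexdigest()[:8]
    keep = max_length - len(digest) - 1  # room for "_" + digest
    if keep <= 0:
        raise ValueError("max_length too small to keep a readable prefix")
    return f"{name[:keep]}_{digest}"
```

Keeping a readable prefix preserves the information you need to recognize the job in the DIRAC Web Interface.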

I get an error which starts with ‘FileCatalog._getEligibleCatalogs: Failed to get file catalog configuration. Path /Resources/FileCatalogs does not exist or it’s not a section’

This is a Configuration System error that has not been fully debugged yet. Check that your ``dirac.cfg`` file is correctly edited. In some cases, the interface code automatically retries the affected commands when this happens.
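The automatic retry mentioned above follows a common pattern. The sketch below is a generic, hypothetical helper (not the interface’s own code), where ``command`` stands for any call that may fail transiently; the number of attempts and the delays are arbitrary choices.

```python
import time


def retry(command, attempts=3, delay=5.0, backoff=2.0, retry_on=(Exception,)):
    """Call ``command()`` until it succeeds or the attempts are exhausted."""
    for attempt in range(1, attempts + 1):
        try:
            return command()
        except retry_on:
            if attempt == attempts:
                raise  # give up and propagate the last error
            time.sleep(delay)
            delay *= backoff  # wait longer before each new attempt
```

Retrying only helps with transient failures: if ``dirac.cfg`` is misconfigured, every attempt fails and the last error is raised.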